Thesis Grants

Each semester, the Digicomlab funds several master's thesis projects by students in the (research) master's programmes of the Department of Communication Science at the University of Amsterdam. The lab funds projects that use or study digital methods in innovative ways. More information on the theses funded by the lab can be found below:

2025

By Jiayi Yan

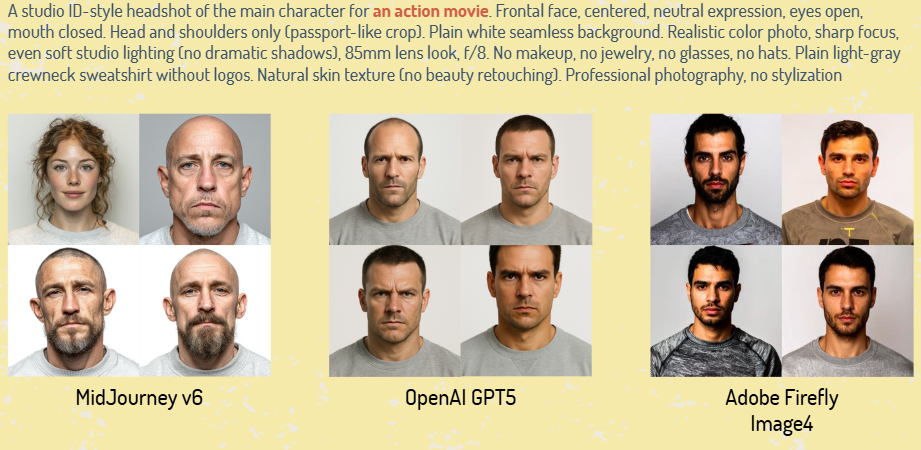

AI systems formalize, classify, and amplify historical forms of discrimination. It is unsurprising that when asked to generate “action film main characters,” models overwhelmingly produce white, masculine men (see image). This predictability is precisely the problem: as AI-generated imagery surges, accepting these patterns as “common knowledge” allows encoded biases to naturalize into infrastructure. We pursue two questions. First, do AI outputs reflect human preferences? We compare 512 AI-generated images against 128 human-selected preferences from a prior study. Second, what defaults do AI systems encode? Focusing on gender, race/ethnicity, age, and facial features, we examine how algorithmic systems erase differences while reifying inequalities. Grounded in decolonial AI perspectives and social identity gratifications theory, this research grapples with measuring bias and creating spaces for fair representation.

Semester 2, academic year 2024/2025

By Dongdong Zhu

A major issue with generative AI is the presence of biases, a term that often carries a negative connotation, suggesting an unfair or unjustified preference for certain ideas or groups (May, 2021). Multiple studies have shown that women are underrepresented in AI-generated depictions of various occupations. However, existing research on gender bias in AI career representations often focuses on a single dimension, such as the proportion of women (Currie et al., 2024) or how their expressions and gestures are depicted (Sun et al., 2024). A comprehensive approach to measuring gender bias is lacking, and there has been limited investigation into how the biases of generative AI compare to real-world cultural biases.

This study aims to explore gender bias in AI-generated images of politicians as a specific profession and to compare these findings with the extensive body of research on gender bias in human-generated representations. It first examines quantitative differences in the proportion of female politicians across AI-generated, human-generated, and real-world representations. Then, building on the Agency/Communion Model and using automated content analysis, it explores whether generative AI reproduces or alters human bias: specifically, whether female politicians are more likely to be depicted in communal-oriented political domains and male politicians in agentic-oriented domains.

Semester 2, academic year 2023/2024

By Ellen Linnert

Despite its popularity, YouTube has gained a reputation for fostering toxic communication through its affordances (Alexander, 2018; Munn, 2020). This echoes broader concerns that social media sites could hinder deliberative democratic communication processes (Pfetsch, 2020). However, the role of social media in facilitating particular discourse dynamics remains unclear. Algorithms that can structure discourse on a platform and encourage expressive behaviors by ranking user comments (e.g., YouTube’s “top comment” algorithm) remain largely understudied.

Divisive expressions in particular, that is, outraged references to one’s partisan out-group within a moral context (e.g., accusing the out-group of violating moral norms), might fuel social divides. These highly emotional comments, which moralize political conflict, can obstruct productive deliberation while also being highly engaging for platform users (Brady et al., 2017, 2021; Rathje et al., 2021). This poses a potential dilemma for the algorithmic ranking of such comments. Therefore, this study investigates the role of divisive expressions in the algorithmic ranking of, and engagement with, user comments on YouTube news content.

To tackle the challenges posed by large social media datasets and language processing, the study employs state-of-the-art computational methods based on pre-trained large language models. This allows elements of divisive expressions to be detected automatically in large quantities of user comments on US-focused news content. By assessing the relationship between divisive expressions and the algorithmic ranking of user comments at scale, this study contributes to the understanding of algorithmic affordances and online public discourse.

Semester 2, academic year 2023/2024

By Yuyao Lu

Climate researchers, positioned as key communicators on climate change, face the challenge of promoting climate action among the public. With billions of people engaging with social media every day, this study uses social media as a platform through which climate researchers can shape public perceptions of, and actions towards, climate change. Leveraging the popularity of short-form videos, the study aims to create a novel online intervention that illustrates climate researchers’ personal behaviors (climate-friendly vs. climate-unfriendly) and to substantiate its impact with empirical evidence.

Our central research question asks to what extent climate researchers’ behavior influences the public’s efficacy beliefs, perceptions of climate urgency, and, subsequently, their pro-environmental actions. Given the role of algorithmic recommendation systems in shaping repetitive media consumption, we are particularly interested in the effects of repeated exposure rather than a one-time intervention. To this end, we employ a longitudinal experiment with repeated exposure to climate researchers’ behavior, combined with a between-subjects design. This approach allows for an in-depth investigation of changes in public perceptions and behaviors over time.

Beyond empirical insights, this study seeks to extend behavior change theories, enhancing their applicability to the collective challenge of climate change. Additionally, it aims to develop a robust climate communication strategy with practical implications for science communication. The ultimate goal is to contribute both theoretically and practically, providing valuable insights for effective climate communication strategies.

Semester 2, academic year 2023/2024

By Eoghan O’Neill

Constructing an image that resonates with voters is a major focus of any modern political campaign, and a recent surge of visual studies in Communication Science reflects this. Most of these have considered how candidates visually frame themselves on their personal social media accounts. However, the sustained importance of the broader news media means that candidates can never fully control their image. Image production consists of multiple stages in which editors, journalists, and other news producers attempt to influence how the public sees a candidate. With the expansion of digital media, these news producers have diversified, but the dynamics of visual framing in this diversified context are not well understood.

This paper therefore explores the visual framing of candidates during the 2020 US election on YouTube. As a major source of news for US adults with a large community of both independent and mainstream news producers, YouTube is a fitting place to investigate this question. Just as shifts in the campaign and media environment require us to pose new questions, so too do they require new methodologies. To adequately process the large amount of visual data posted to YouTube during an election, this paper takes an automated content analysis approach. First, representative frames from videos will be analysed for the presence of the two candidates using a Python facial recognition library. Then, the manifest elements of visual frames will be analysed using the Contrastive Language-Image Pre-training (CLIP) model.

Semester 1, academic year 2025/2026

By Quyang Zhao

Despite growing attention to diversity, equity, and inclusion, advertisers still overrepresent majority groups while giving minority groups limited or stereotypical visibility. Recent research has begun examining human influencers with diverse identities who strategically present themselves to balance diversity advocacy with commercial endorsement. Virtual influencers have also entered this space, offering new ways to represent and shape diversity through technology.

Although research on diversity representation in influencer marketing is emerging, it remains in its infancy and offers limited insight into the mechanisms underlying audience responses. This study investigates how virtual versus human diverse influencers shape explicit evaluations and implicit associations through parasocial interaction. It also examines whether the positive “halo effect” found for diverse human influencers similarly extends to virtual influencers.

Semester 1, academic year 2025/2026

By Amy Doerr

This project investigates the emergence of post-truth dynamics within the subreddit r/politics between 2007 and 2024. We analyze two parallel developments: the gradual shift from sharing mainstream news toward sharing alternative media sources, and the rising intensity of emotional language. Using a fine-tuned SBERT transformer model, the study detects nuanced changes in sentiment and emotional intensity across millions of user interactions. We explore whether active presidential campaigns drive users away from factual legacy outlets toward sensationalized content. Ultimately, this research illuminates the growing tension between objective information and affective polarization in digital public spheres.

Semester 2, academic year 2023/2024

By Nele Pralat

As AI applications such as ChatGPT and Meta AI continue to advance and integrate into various online spaces, their touchpoints with daily life are growing, with some AI systems offering advice, purchase recommendations, and more. Yet, relative to AI’s growing presence, there is little research on the implications of our interactions with AI. How we interact with AI as a social actor, particularly as an empathetic entity, remains largely unexplored. This is especially relevant given the potential of AI to act not just as a tool but as an entity capable of influencing trust and decision-making, one that is becoming increasingly difficult to distinguish from human interaction partners.

Displays of empathy have been found to significantly influence trust in interpersonal relationships, whilst trust has been found to positively influence individuals’ patronage intentions. This project explores whether this effect translates into the realm of human-computer communication, by examining the impact of an empathetic AI on patronage intentions toward it, mediated by trust towards the AI’s benevolence and competence.

The project uses two custom AI chatbots, built with GPT-4 and trained to communicate in either a highly empathetic or a non-empathetic manner. Previous studies on conversational agents have primarily simulated chatbot interactions using basic, pre-scripted dialogues. This research innovates by leveraging advanced machine learning to actively generate empathetic and rational responses, moving beyond mere simulations to actual AI-driven conversations.

Semester 1, academic year 2024/2025

By Yunhua Tan

This project investigates how AI versus human influencers on Instagram affect social media users’ mental health and health behaviors. Focusing on fitspiration content, it examines their effects on mood, dietary restriction, and exercise habits. In an experience sampling method (ESM) design, participants receive daily fitspiration posts from either an AI or a human influencer for one week and self-report their mood, diet, exercise, and parasocial relationships. By capturing data in natural environments, this study provides a dynamic understanding of how parasocial bonds and health behaviors evolve. The findings aim to deepen insights into parasocial relationship theory, clarify AI’s role in health communication, and inform social media-based public health strategies.

Semester 1, academic year 2024/2025

By Wenwen Guo

Currently, an estimated 1.3 billion people (16% of the global population) experience significant disability (WHO, 2024). However, most disabled people remain unheard and underrepresented: disabled athletes in the Paralympic Games have only begun to receive research attention in the past 20 years (Jefferies et al., 2012), and even within this limited coverage, most depictions focus on impairment rather than athletic achievement and performance (Auslander & Gold, 1999; Hardin & Hardin, 2004; Schantz & Gilbert, 2001), resulting in bias against and misunderstanding of this marginalized group.

The Paralympic Games provide an open platform for athletes worldwide with various types of disabilities to come together and compete at the limits of their physical abilities (Rojas-Torrijos & Ramon, 2021). The Games aim to promote inclusion and awareness of the disabled population, emphasize the athletic achievements of marginalized groups worldwide, and motivate achievement and persistence for all (Ersöz & Esen, 2023). The event is also actively covered by media outlets in the United States, the United Kingdom, Canada, and China (Boykoff, 2024; Solves et al., 2019), with reports widely featured in the Washington Post (Lee, 2013), BBC (Buysse & Borcherding, 2010; Lee, 2013; Pullen & Silk, 2020), CBC (Antunovic et al., 2024; Quinn & Yoshida, 2016), and China Daily (Buysse & Borcherding, 2010; Cheong et al., 2021). This coverage reflects the widespread interest of professional news outlets and policymakers in disability as an international issue, and their aim to shape public opinion through their broad audience reach.

DePauw (1997) introduced three frames for representing marginalized groups in sports reporting, namely physicality, masculinity, and sexuality. This study investigates whether these frames are present in four professional news outlets (from the US, UK, Canada, and China) when they report on disabled athletes at the Paralympic Games. The study uses automated content analysis to examine framing differences across Games and countries, as well as the moderating role of a country acting as host.

Semester 2, academic year 2023/2024

By Yilan Wang

Conversational agents, powered by advanced Artificial Intelligence and Natural Language Processing technologies, are becoming increasingly accessible and prevalent in everyday life. Over the past few years, they have made phenomenal progress. This progress has opened up promising prospects for online marketing practices, because conversational agents satisfy consumers’ need to be involved in conversational marketing activities.

Although the development and use of conversational agents is growing rapidly, little communication research has examined their media effects. This research aims to provide insights into the persuasive effects of conversational agents from the perspectives of consumer psychology and marketing practice. In particular, it tests how distinct types of decisional guidance provided by a conversational agent (suggestive guidance: providing direct advice about purchase decisions vs. informative guidance: providing only pertinent product information) influence consumers’ purchase intention. Additionally, the research investigates the moderating role of consumer scepticism in this persuasion process.

The research is conducted as a lab experiment with a 2x2 between-subjects factorial design in the university lab. Participants are recruited from among students at the University of Amsterdam. They are instructed to interact with one of two chatbots in a scenario of exploring restaurants worth visiting in Amsterdam; this is innovative because interactivity adds realism compared with purely scenario-based designs. The chatbots are built with the same code and trained on predetermined datasets, and the experimental conditions differ only in the text output (i.e., whether an explicit recommendation is provided).

Semester 2, academic year 2024/2025

By Jaemin Daniela An

Recent years have seen rapid growth in the use of artificial intelligence (AI) in various areas, such as recruiting, healthcare, and marketing. With the increasing use of AI in gathering information and making decisions, concerns arise regarding potential biases embedded in AI systems that might result in unfair outcomes. Contrary to the common belief that algorithms are objective, AI systems can introduce biases influenced by human cognitive biases, which might reflect and further amplify existing social inequalities and harmful stereotypes. This disproportionately affects marginalized groups.

On the other hand, previous studies also show that human judgement is inherently flawed and prone to biases, whether intentional or unconscious. Based on this argument, practitioners have used AI systems to assist or even replace human decision-making with the aim of increasing objectivity.

The debate over whether humans or AI show stronger biases is not new, and existing research offers evidence in both directions. This research project therefore aims to provide more insight into whether and how social biases differ between humans and AI. Specifically, it focuses on gender and age biases, as well as their intersection, where age and gender biases overlap. To assess implicit biases in the context of occupational roles, the visual wiki survey tool PictoPercept will be used for both groups. This innovative method addresses existing methodological challenges in measuring implicit biases, for instance by enhancing accessibility through visual stimuli and mitigating social desirability effects.

Semester 1, academic year 2024/2025

By Qiru Huo

Adolescents’ self-presentation on social media is closely linked to their well-being, though current research indicates that the relationship is complex, with both positive and negative effects. To better understand this relationship, more research is needed on specific social media features and the content shared. This study focuses on two key Instagram features: stories, which represent the ephemerality affordance, and posts, which represent the persistence affordance. In terms of content, the concept of capitalization explains how self-presentations with different sentiments on social media influence users’ well-being, as people tend to feel more positive when sharing positive content and vice versa. This study aims to investigate the sentiment distribution in posts and stories shared by adolescents on Instagram and to explore how sharing content with varying sentiments affects their subjective well-being at both the between-person and within-person levels.

This study adopts an innovative mixed-methods approach, combining data donation with experience sampling and using data from a large-scale intensive longitudinal cohort project that has already completed data collection. Data donation enables the measurement of participants’ objective social media use by providing access to the specific content of published posts and stories. Multimodal sentiment analysis, using pre-trained and fine-tuned models, will categorize the sentiment of the many posts and stories included in the data download packages (DDPs). After the sentiment classification of posts and stories, subjective well-being data collected via experience sampling will be incorporated to analyze the short-term impact of sharing content with varying sentiments on adolescents’ well-being.

Semester 2, academic year 2023/2024

By Paul Ballot

Advancements in generative Artificial Intelligence (AI) and the emergence of Large Language Models (LLMs) are fuelling fears over the rise of personalised mis- and disinformation on an industrial scale. However, this potential for the mass production of synthetic “fake news” may not be the only cause for concern: recent findings indicate that AI-generated disinformation could also be more difficult to detect for human raters and possibly even for automated classifiers. This could result from the prevalence of news content in the training data, allowing LLMs to imitate linguistic patterns associated with actual news while retaining the semantics of misinformation. Hence, a key contribution of this paper is its attempt to understand why AI-generated disinformation possesses greater credibility than its conventional counterpart and whether this, in turn, could reduce the effectiveness of media literacy interventions against disinformation. To test these hypotheses, we analyse to what degree synthetic disinformation resembles traditional news and human-authored disinformation across various linguistic features. Furthermore, in an experiment, we evaluate whether generic inoculation remains effective in increasing accuracy for synthetic disinformation. Synthesising insights from various methods, we hope to illuminate the risks associated with LLMs while showcasing the potential of combining computational content analysis and experimentation.

Semester 1, academic year 2024/2025

By Haochuan Liu

This study aims to perform a large-scale automated content analysis with a fine-tuned BERT model to compare journalistic objectivity in fake news genre texts and in texts from mainstream media. By capturing differences in objectivity, the study highlights the characteristics of fake news as a genre, illustrates how it deviates from traditional journalistic conventions, and provides theoretically grounded features for the automatic detection of fake news. The study measures two theoretical components of objectivity, factuality and impartiality, to form a comprehensive assessment. The former refers to the extent to which an article presents facts without opinions, while the latter evaluates whether the article maintains a neutral stance toward different political parties.

Semester 2, academic year 2023/2024

By Xinfeng Gu

This study aims to explore how two emerging platforms of the digital age influence cultural hierarchies. First, streaming platforms have become increasingly important in people’s lives given their unprecedented capacity to popularize and globalize cultural products, yet debate remains about their role as a democratizing force within the cultural sphere. Second, the rise of social media platforms has challenged the elite discourse historically upheld by elite journalism in the field of culture, although the extent and trends of this challenge are difficult to quantify. These shifts prompt an inquiry into how the discourse of journalism and that of social media platforms have diverged or converged over the last two decades, and how the widening or narrowing of this discursive gap, as an indicator of cultural hierarchy, relates to streaming platforms.

In this study, an automated content analysis will be conducted on music reviews from arts journalism and online forums. Using Concept Mover’s Distance (CMD) to gauge engagement with concepts, we will measure the discourse of each music review along two conceptual dimensions, legitimacy and gender, in a word-embedding space. To validate the accuracy of this approach in measuring concepts, human coders will manually code a portion of the data. Upon validation, CMD will be computed for the entire dataset. Subsequently, linear regression and multilevel regression analyses will examine the relationship between the discursive gap in legitimacy and gender and characteristics at both the news agency level and the music streaming platform level.

Semester 2, academic year 2024/2025

By Qianyi Wang

The globalized film industry provides a space for diverse cultural narratives to interact, yet existing power structures disproportionately favor productions from the Global North (Hollywood as a prime example), marginalizing other voices. This study examines structural inequalities in the globalized film industry by analyzing how films from the Global South and North are produced and consumed. Through automated content analysis on a large-scale dataset collected from public sources such as The Movie Database (TMDb), the study addresses these questions: (1) How does production origin (Global South vs. North) shape film distribution, audience consumption, and thematic content of films in an increasingly globalized film market? (2) To what extent has the global film market diversified in terms of production origin, distribution and audience consumption between 2000 and 2024?

This study analyzes metadata on film production countries, distribution patterns, global revenues, and narrative content across a 25-year period. It first investigates the dynamic relationship between production origin on the one hand and film distribution and audience consumption on the other, then applies topic modeling to compare dominant themes in films from the Global South and North. By combining quantitative methods with a critical, postcolonial lens, this study offers empirical insights into media inequalities at a global scale. The findings will allow a critical assessment of how globalization reinforces or challenges existing hegemonies, contributing to discussions on media diversity and representation.

Semester 1, academic year 2023/2024

By Bruno Nadalic Sotic

This thesis explores how visual elements within news headlines influence audience engagement. It specifically investigates the presence of news values in images and their impact on user interactions with news articles, a topic previously understudied in communication science. The research utilizes a comprehensive dataset of user behavior logs from the Microsoft News platform, encompassing both click and non-click activities, to shed light on the dynamics of news consumption.

The study employs machine vision techniques via commercially available APIs to systematically convert visual elements of news headline imagery into textual representations. This approach allows for a detailed analysis of ‘image features’ (such as the presence of notable figures, emotional expressions, or unusual scenes) and their alignment with traditional news values like prominence, novelty, and emotional impact. In doing so, this study attempts to establish to what extent news values can be measured using automated visual content analysis.

The study applies a combination of supervised and unsupervised machine learning methods to correlate these image features with news value factors and subsequently quantify their influence on user engagement metrics. This approach not only provides a measurable framework for understanding how visuals affect news engagement but also extends news value theory to the visual domain.

Semester 2, academic year 2024/2025

By Xiaoxiao Wang

The rapid advancement of artificial intelligence (AI) enables AI agents to take on various communicative roles, including robot journalists, AI content writers, and virtual composers. Although previous research has provided valuable insights into public perceptions, attitudes, and behaviors toward AI in these domains, little is known about audiences’ perceptions of AI as an entertainment content creator, particularly in video production. This study examines whether AI-generated short entertainment videos elicit different levels of engagement, liking, and perceived credibility compared to human-created videos. Additionally, it explores whether these effects stem from the actual source of the video (AI vs. human) or the perceived source based on labeling. Building on Construal Level Theory (Trope & Liberman, 2010), this study further investigates whether psychological distance (high vs. low) in video content moderates audience responses to AI- vs. human-generated videos. A lab experiment is conducted to analyze these differences, employing computational decision-making models and linear mixed models. Accordingly, the study addresses the following research questions: To what extent do AI-generated entertainment videos elicit different levels of user engagement, liking, and perceived credibility compared to human-created entertainment videos? Does psychological distance (high vs. low) moderate how people engage with and evaluate AI- versus human-generated entertainment videos?

Semester 2, academic year 2024/2025

By Xinkangrui Gao

Cancer-related conspiracy beliefs can hinder timely treatment and damage trust between patients and healthcare providers. This study investigates how artificial intelligence (AI) chatbots can be used to counter such beliefs. It addresses two key research questions: (1) Are chatbots more effective than traditional health websites in reducing individuals’ conspiracy beliefs about cancer? and (2) How does the expression of empathy by chatbots influence their persuasive effectiveness? A between-subjects experiment compares three conditions: exposure to a cancer information website, interaction with an empathetic chatbot, and interaction with a non-empathetic chatbot. The chatbot is custom-built using Llama 3 and integrated with a retrieval-augmented generation (RAG) system. It provides real-time responses based on a locally stored knowledge base compiled by scraping cancer-related information from authoritative health websites. This design enables dynamic, content-grounded human-AI interaction and allows for a controlled comparison of communication modes and emotional tone. Theoretically, the study is informed by the HAII-TIME framework and examines how chatbot empathy may function as an interface cue in human-AI interaction. Practically, it contributes to the development of scalable, evidence-based interventions that leverage AI to combat health misinformation in cancer care.