2025 By Jiayi Yan

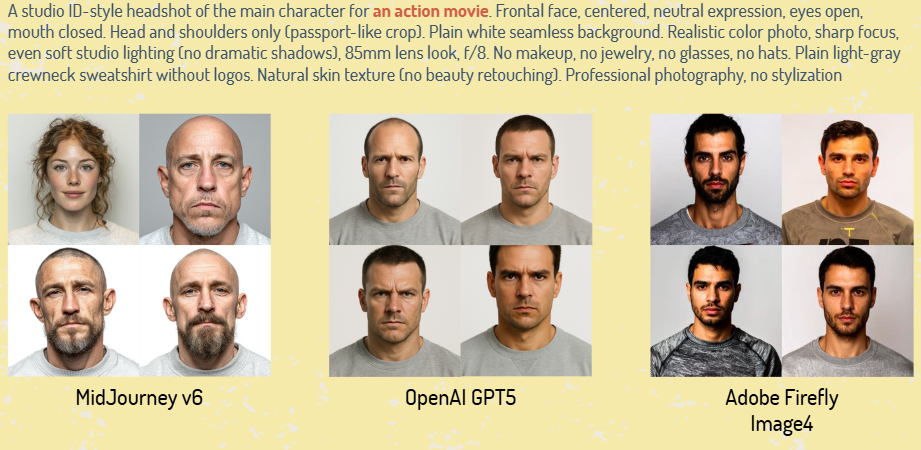

AI systems formalize, classify, and amplify historical forms of discrimination. It’s unsurprising that when generating “action film main characters,” models overwhelmingly produce white, masculine men (see image). This predictability is precisely the problem: as AI-generated imagery surges, accepting these patterns as “common knowledge” allows encoded biases to naturalize into infrastructure. We pursue two questions. First, do AI outputs reflect human preferences? We compare 512 AI-generated images against 128 human-selected preferences from a prior study. Second, what defaults do AI systems encode? Focusing on gender, race/ethnicity, age, and facial features, we examine how algorithmic systems erase differences while reifying inequalities. Grounded in decolonial AI perspectives and social identity gratifications theory, this research grapples with measuring bias and creating spaces for fair representations.